The Microsoft Copilot Stack is emerging as a powerful framework for companies developing solutions based on artificial intelligence. As organizations explore this innovative technology, understanding its architecture becomes essential for effective implementation.

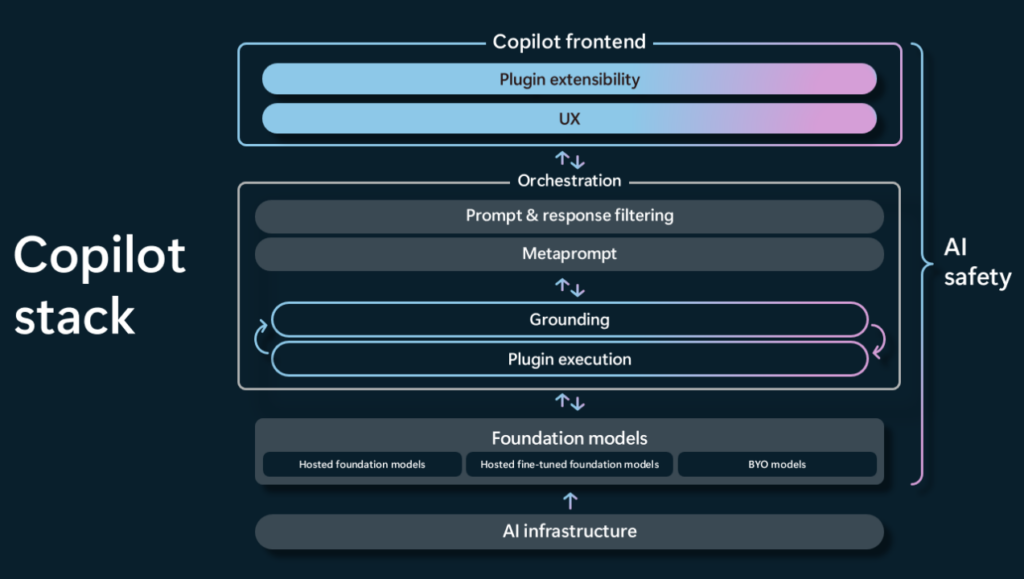

At its core, the Copilot Stack consists of four interconnected layers: Infrastructure, Foundation Models, Orchestration, and User Experience. Each layer plays a vital role in building custom AI assistants capable of enhancing productivity and decision-making across various sectors.

In this article, we explain the role and features of each element that makes up the stack and look at the most recent updates it has received.

Microsoft Copilot Stack: an introduction

The world of generative artificial intelligence is as exciting as it is complex, and companies across the globe often find themselves facing a landscape that seems confusing and can shift overnight.

Most businesses trying to integrate AI into their enterprise applications do not know where or how to begin. With so many new technologies available, it is difficult to tell which ones are truly valuable and which might turn out to be a disaster.

In its Global AI Adoption Index 2023 report, IBM identified limited AI skills and expertise, data complexity, and ethical concerns as the main barriers preventing companies from successfully adopting AI.

For Independent Software Vendors (ISVs) and Microsoft partners, this challenge may feel even more daunting, given the vast size and complexity of the Redmond-based company’s technology ecosystem.

The fast and continuous pace of product innovation, combined with knowledge sharing that is sometimes vague or difficult to interpret, can lead to feelings of confusion, frustration, concern, and even denial.

Fortunately, there is a concept that can serve as a guide to simplify this process: the Copilot Stack. In the following sections, we will explore its components and how it can help us navigate the complex landscape of AI solution development and implementation.

What is Microsoft Copilot

Before we begin, let’s take a moment to bring everyone up to speed and briefly introduce the main focus of this article.

Copilot is the AI-powered digital assistant designed to simplify users’ daily tasks, boost productivity, and spark creativity. Its primary goal is to support code generation, writing assistance, and collaboration. Seamlessly integrated into popular Microsoft 365 apps such as Word, Excel, PowerPoint, Outlook, and Teams, Copilot offers contextual suggestions and helps users understand information effectively.

Microsoft Copilot is not just another productivity tool. It is a catalyst for a deep transformation in how we work and interact with technology. It represents a shift away from manual, repetitive tasks toward a future where humans and artificial intelligence collaborate. By freeing us from many surface-level tasks, Copilot enables us to focus on strategic thinking, creativity, and innovation.

Under the hood, Microsoft Copilot is powered by cutting-edge AI technologies. Everything starts with large language models (LLMs), particularly those in the GPT family developed by OpenAI, such as GPT-4. These models, trained on vast datasets of text and code, allow Copilot to understand and generate human language with remarkable fluency.

Microsoft further enhances these models with proprietary data and techniques, including what it calls the Prometheus model, to optimize Copilot's performance within the Microsoft 365 ecosystem.

But LLMs are only part of the picture. They are themselves a product of machine learning, the branch of AI that lets systems learn patterns from data instead of being explicitly programmed. On top of the model, Copilot draws on the context of your work, such as the documents, emails, and meetings it can access, to tailor its responses to you.

The capabilities of Microsoft’s AI digital assistant are aimed at a wide range of users and professionals, including developers, content creators, and knowledge workers looking for AI-powered support in their daily activities.

The main ways to benefit from Microsoft Copilot are:

- Adopting Copilot: Microsoft offers various Copilot assistants to boost productivity and creativity. Integrated into multiple Microsoft products and platforms, Copilot transforms the digital workspace into a more interactive and efficient environment.

- Extending Copilot: Developers can incorporate external data to streamline user operations and reduce context switching. This not only enhances productivity but also promotes better collaboration. Copilot makes it easy to integrate this data into the Microsoft tools you use every day.

- Building your own Copilot: In addition to adopting and extending, it is possible to create a custom Copilot for a unique conversational experience using Azure OpenAI Service, Azure AI Search (formerly Cognitive Search), Microsoft Copilot Studio, and other Microsoft Cloud technologies. A custom Copilot can integrate business data, access external data in real time via APIs, and embed itself into enterprise applications.

Microsoft Copilot is available in various forms, with pricing packages tailored to different use cases, such as:

- Copilot (Free): The free version of Copilot provides access to generative AI features for PC management (in Windows), online search (in Edge), and general chatbot conversations on the web.

- Copilot Pro: Designed for individual users who want to get the most out of generative AI. For about 20 US dollars per month per user, you get access to Copilot in tools like Outlook, Word, Excel, PowerPoint, and OneNote.

- Copilot for Microsoft 365: Targeted at organizations and teams using Microsoft 365 apps, this version includes access to Copilot Studio, enterprise-grade security, privacy and compliance, and advanced capabilities.

In addition, several Copilot solutions are tailored to specific Microsoft tools. For instance, there are Copilot integrations within Dynamics 365 for sales and customer service teams, and Microsoft Security Copilot capabilities integrated into Microsoft Purview.

Microsoft has also begun rolling out and updating a series of “Agents” designed for specific business sectors such as finance, customer service, and marketing, with dedicated training and features for each area.

According to Microsoft, nearly 60 percent of Fortune 500 companies now use Copilot, and the number of employees using the AI assistant daily has nearly doubled quarter over quarter. The company also reported that the number of Copilot customers has grown by more than 60 percent quarter over quarter, and the number of customers with over 10,000 seats has more than doubled in the same period.

Microsoft Copilot Stack: the 4 fundamental pillars

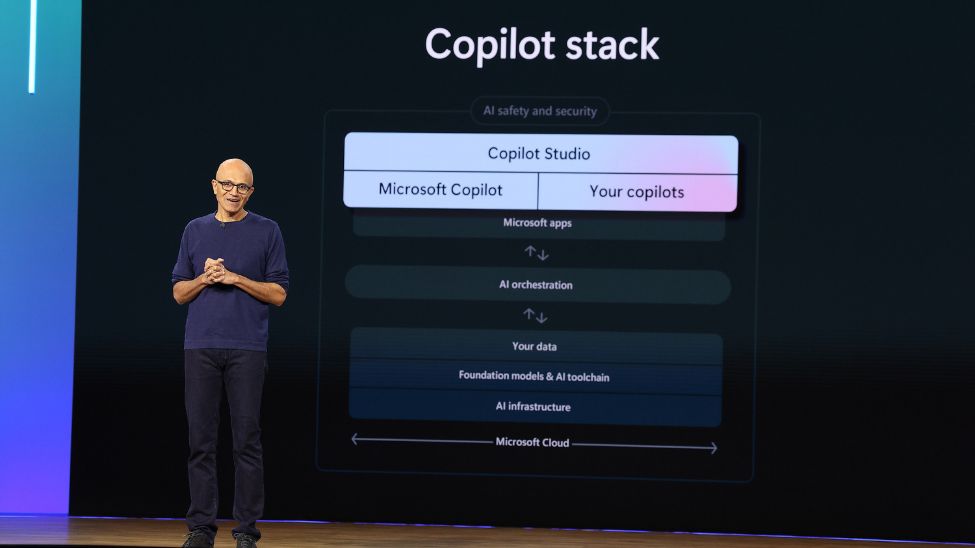

The Copilot Stack is an architectural model for building applications or custom copilots that deliver natural language-based experiences powered by LLMs.

It serves as the framework underlying every Microsoft Copilot product.

The rise of tools such as AI chatbots is transforming how users interact with software. Instead of navigating through multiple screens or executing numerous commands, users increasingly prefer to interact with an intelligent agent capable of completing tasks efficiently.

Microsoft quickly recognized the importance of integrating an AI chatbot into every application. After developing this common framework for building Copilot across different products, the company is now extending this approach to its developer community and Independent Software Vendors (ISVs).

In many ways, the Copilot Stack can be compared to a modern operating system.

It runs on a powerful hardware infrastructure, built on a combination of CPUs and GPUs. The foundation models serve as the kernel of the stack, while the orchestration layer plays a role similar to process and memory management. Finally, the user experience layer functions like the shell of an operating system, exposing features through an interface.

Let’s now take a closer look at how Microsoft has structured the Copilot Stack, without going too deep into technical specifics.

Infrastructure

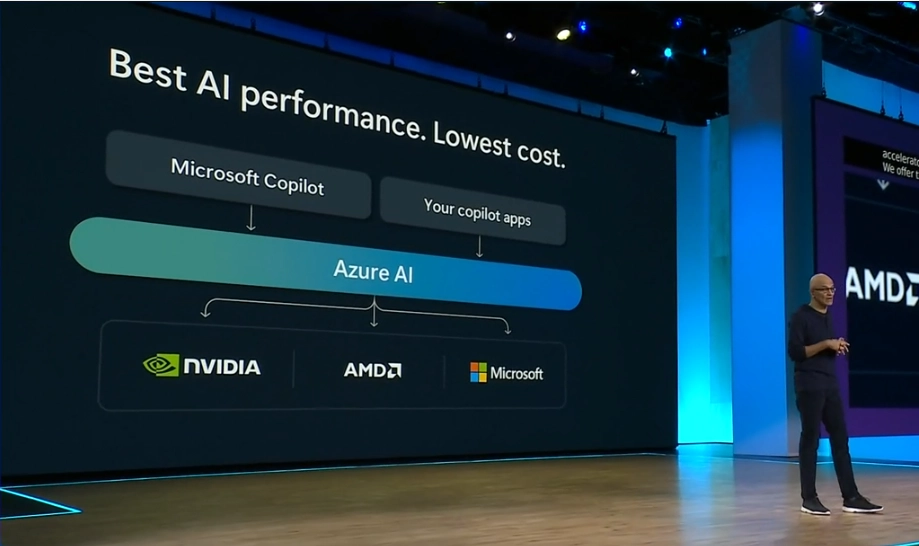

Microsoft's AI supercomputing infrastructure on Azure, its public cloud, is the foundation of the platform. This purpose-built infrastructure, powered by tens of thousands of cutting-edge NVIDIA GPUs, delivers the computational power needed to run complex deep learning models that can respond to requests in just a few seconds.

The same infrastructure powers one of the most successful apps of recent years: OpenAI's ChatGPT.

Foundation Models

Foundation models are the kernel of the Copilot Stack. They are large and complex artificial intelligence models trained on vast datasets to perform a variety of general tasks, such as understanding and generating natural language, images, or audio. These models can be applied across multiple industries and adapted to specific scenarios through a process known as fine-tuning.

Examples of foundation models include OpenAI's GPT-4, DALL-E, and Whisper.

Some open-source models such as BERT, Dolly, and Llama may also be part of this layer. Microsoft is partnering with Hugging Face to bring a curated catalog of open-source models to Azure. These foundation models rely heavily on the underlying GPU infrastructure to perform inference.

Although foundation models are powerful on their own, they can be adapted to specific domains. For instance, an LLM trained on a general corpus of text can be fine-tuned to understand terminology used in specialized fields such as healthcare, law, or finance.
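Fine-tuning starts with domain-specific training examples. For chat models, Azure OpenAI (like OpenAI's own API) accepts a JSON Lines file in which each line holds one example conversation under a `messages` key. The sketch below assembles such a file in memory; the legal-domain examples, the system prompt, and the helper name `to_jsonl` are hypothetical, and the exact format requirements should be checked against the current Azure OpenAI fine-tuning documentation.

```python
import json

# Hypothetical domain examples: each pairs a question with the answer
# we want the fine-tuned model to learn (legal terminology here).
EXAMPLES = [
    ("What does 'force majeure' mean?",
     "A contract clause that frees parties from liability when an "
     "extraordinary event prevents them from performing."),
    ("What is an indemnity clause?",
     "A clause in which one party agrees to compensate the other "
     "for specified losses."),
]

SYSTEM_PROMPT = "You are an assistant that explains legal terms concisely."


def to_jsonl(examples, system_prompt):
    """Return chat-format JSON Lines text, one training example per line."""
    lines = []
    for question, answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print instead of uploading; a real pipeline would save this as a
    # .jsonl file and submit it as the training dataset.
    print(to_jsonl(EXAMPLES, SYSTEM_PROMPT))
```

A few hundred such examples in the target domain are usually a more realistic starting point than the two shown here.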

Microsoft’s Azure AI Studio and Copilot Studio host a variety of foundation models, including customized models trained by third-party organizations on Azure.

Orchestration

This layer acts as the bridge between the underlying foundation models and the user.

Since generative AI relies on prompts, the orchestration layer analyzes each prompt, whether it comes directly from the user or from the application, to understand the intent behind it. It first applies a moderation filter to ensure the prompt complies with safety guidelines and does not lead the model to generate irrelevant or harmful responses.

This same layer also filters out model replies that do not align with the expected outcome.
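The two checkpoints described above, one on the way in and one on the way out, can be sketched as follows. In practice a dedicated classifier service (Azure AI Content Safety, for example) would make the safety decision; here a hypothetical keyword blocklist stands in purely to show where the checks sit. `BLOCKED_TERMS`, `moderate_input`, and `filter_output` are illustrative names, not part of any Microsoft API.

```python
import re
from typing import Optional

# Hypothetical blocklist: a real deployment would call a content-safety
# classifier instead of matching keywords.
BLOCKED_TERMS = {"malware", "credit card dump"}


def is_safe(text: str) -> bool:
    """Return False if the text contains any blocked term (case-insensitive)."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def moderate_input(prompt: str) -> str:
    """Reject unsafe prompts before they ever reach the model."""
    if not is_safe(prompt):
        raise ValueError("Prompt rejected by moderation filter")
    return prompt


def filter_output(reply: str, expected_pattern: str) -> Optional[str]:
    """Drop replies that are unsafe or do not match the expected shape."""
    cleaned = reply.strip()
    if not is_safe(cleaned):
        return None
    if re.fullmatch(expected_pattern, cleaned) is None:
        return None
    return cleaned
```

For example, `filter_output("Total: 42", r"Total: \d+")` returns the reply unchanged, while a rambling answer that does not match the pattern is discarded, so the application can retry or show a fallback message.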

The next step in orchestration is to enhance the prompt through meta-prompting, which means adding application-specific context. For example, the user may not explicitly ask for the response to follow a specific format, but the application’s UX might require that format to display the result correctly. Think of this as injecting application-specific information into the prompt to align it with the app context.
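A minimal sketch of meta-prompting, assuming a chat-style model that takes a list of role-tagged messages: the user's prompt is left untouched, while a system message carries the application context and the output format the UI needs. The app name, the JSON format, and the helpers `build_messages` and `parse_for_ui` are hypothetical.

```python
import json

# Hypothetical application context the user never types but the UI needs.
APP_CONTEXT = {
    "app_name": "SalesDashboard",
    "output_format": 'Reply only with JSON: {"title": str, "bullets": [str]}',
}


def build_messages(user_prompt: str, context: dict) -> list:
    """Wrap the raw user prompt with an application-specific system message."""
    meta_prompt = (
        f"You are the assistant inside {context['app_name']}. "
        f"{context['output_format']}"
    )
    return [
        {"role": "system", "content": meta_prompt},
        {"role": "user", "content": user_prompt},
    ]


def parse_for_ui(reply: str) -> dict:
    """Check that the model followed the injected format before rendering."""
    data = json.loads(reply)
    if (not isinstance(data, dict)
            or not isinstance(data.get("title"), str)
            or not isinstance(data.get("bullets"), list)):
        raise ValueError("Reply does not match the UI format")
    return data
```

The user only asks "Summarize Q3 sales"; the orchestration layer adds the formatting contract, and `parse_for_ui` verifies the model honored it before the result is displayed.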

Once the prompt is built, additional factual data may be needed to ensure accurate responses. Without such data, LLMs may "hallucinate" and produce inaccurate or misleading outputs. This factual data typically resides outside the LLM domain, in external sources such as the web, databases, or object storage buckets.

Two main techniques are used to bring this external context into the prompt and help the LLM respond more accurately. The first combines an embedding model with a vector database: documents and the user's query are converted into numeric vectors, and the passages whose vectors are most similar to the query's are retrieved and selectively injected into the prompt.
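The retrieval technique can be sketched in a few lines. To keep the example self-contained, a bag-of-words word count stands in for a real embedding model, and an in-memory list stands in for the vector database (a production system might use an Azure OpenAI embeddings deployment and Azure AI Search instead). The class and function names are hypothetical; the ranking by cosine similarity and the injection of the top passages into the prompt are the parts that carry over.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in 'embedding': word counts. A real system calls an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Minimal stand-in for a vector database: stores (vector, text) pairs."""

    def __init__(self) -> None:
        self.items = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]),
                        reverse=True)
        return [text for _, text in ranked[:k]]


def grounded_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Inject only the most relevant passages into the prompt."""
    context = "\n".join(f"- {p}" for p in store.search(question, k))
    return ("Answer using only the context below.\n"
            f"Context:\n{context}\n\nQuestion: {question}")
```

With a store holding a refund policy, office hours, and a support policy, the question "How many days until refunds are issued?" retrieves only the refund passage when `k=1`, so the prompt stays short and on topic.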

The second approach is to build a plugin that bridges the orchestration layer with the external source. ChatGPT uses this plugin model to retrieve data from outside sources and enrich its context.
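The plugin approach can be sketched as a registry of callable functions that the orchestration layer is allowed to invoke. In real systems the model itself usually chooses the plugin and its arguments (for example through function or tool calling); here the choice is passed in explicitly to keep the sketch deterministic. The order-status plugin and its data are hypothetical.

```python
from typing import Callable, Dict

# Hypothetical external source the plugin bridges to (e.g. an order API).
_ORDERS = {"A100": "shipped", "B200": "processing"}


def get_order_status(order_id: str) -> str:
    """Hypothetical plugin: look up an order in the external system."""
    return _ORDERS.get(order_id, "unknown")


# Registry of plugins the orchestration layer is allowed to call.
PLUGINS: Dict[str, Callable[[str], str]] = {"get_order_status": get_order_status}


def call_plugin(name: str, argument: str) -> str:
    """Invoke a registered plugin; refuse anything not in the registry."""
    if name not in PLUGINS:
        raise KeyError(f"No plugin named {name!r}")
    return PLUGINS[name](argument)


def enrich_prompt(user_prompt: str, plugin_name: str, argument: str) -> str:
    """Fetch fresh data through a plugin and append it to the prompt as context."""
    result = call_plugin(plugin_name, argument)
    return f"{user_prompt}\n\n[{plugin_name}({argument}) -> {result}]"
```

Restricting calls to an explicit registry is a simple way to keep the model from reaching systems the application has not exposed.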

Microsoft groups these techniques under Retrieval Augmented Generation (RAG), a widely used pattern designed to make LLM responses more stable and grounded by building prompts enriched with factual and contextual data.

Microsoft initially adopted the same plugin architecture used by ChatGPT to build rich prompts. Open-source projects such as LangChain, along with Microsoft's own Semantic Kernel and Guidance, are now commonly used to implement the orchestration layer.
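Frameworks like these package the orchestration steps described in this section into reusable components. The plain-Python sketch below chains the same steps without depending on any framework's API: moderation, meta-prompting, grounding, the model call, and output filtering. The model is a hypothetical stub that echoes the first fact it receives; in a real copilot it would be a call to a deployed foundation model, and retrieval would fill in `facts`.

```python
from typing import Callable, List

Model = Callable[[str], str]  # stand-in for an LLM call: prompt in, text out

BLOCKED = ("malware",)  # hypothetical blocklist standing in for a safety service


def orchestrate(user_prompt: str, facts: List[str], model: Model) -> str:
    # 1. Moderation on the way in.
    if any(term in user_prompt.lower() for term in BLOCKED):
        return "Sorry, I can't help with that."
    # 2. Meta-prompt: application-specific instructions the user never typed.
    instructions = "Answer in one sentence, using only the facts provided."
    # 3. Grounding: inject retrieved facts (retrieval itself is omitted here).
    context = "\n".join(f"- {fact}" for fact in facts)
    prompt = f"{instructions}\nFacts:\n{context}\nUser: {user_prompt}"
    # 4. Call the foundation model.
    reply = model(prompt).strip()
    # 5. Moderation and sanity check on the way out.
    if not reply or any(term in reply.lower() for term in BLOCKED):
        return "Sorry, I couldn't produce a suitable answer."
    return reply


def fake_model(prompt: str) -> str:
    """Hypothetical model stub: returns the first fact line it was given."""
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return ""
```

Keeping each step as a separate, testable stage is the main design benefit an orchestration layer offers, whichever framework implements it.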

User Experience

The UX layer of the Copilot Stack redefines the human-machine interface through a simplified conversational experience. Many complex UI elements and nested menus may be replaced by a simple, discreet chat widget that sits in the corner of the screen.

This conversational layer can become a powerful front end for completing complex tasks, regardless of what the application does, from simple consumer websites to the most advanced enterprise applications.

Microsoft Copilot Stack: options for developing a custom “copilot”

Copilot applications use AI infrastructure, models, and data to deliver valuable tools and insights to business users, much like a restaurant uses its kitchen, staff, and ingredients to prepare dishes that satisfy its customers.

These applications are the final products, whether they support customer service interactions, help create marketing content, or analyze sales data to provide strategic insights. They are the finished goods that business users rely on to meet specific needs, improve efficiency, or generate value.

As a Microsoft ISV or Partner, there are three main options for building a custom Copilot:

- Build a fully custom Copilot using Azure: This approach involves using the full Azure suite, including AI and machine learning tools, to develop an entirely customized AI solution from the ground up. It is suitable for ISVs or partners who require highly tailored solutions for specific industries or unique business processes.

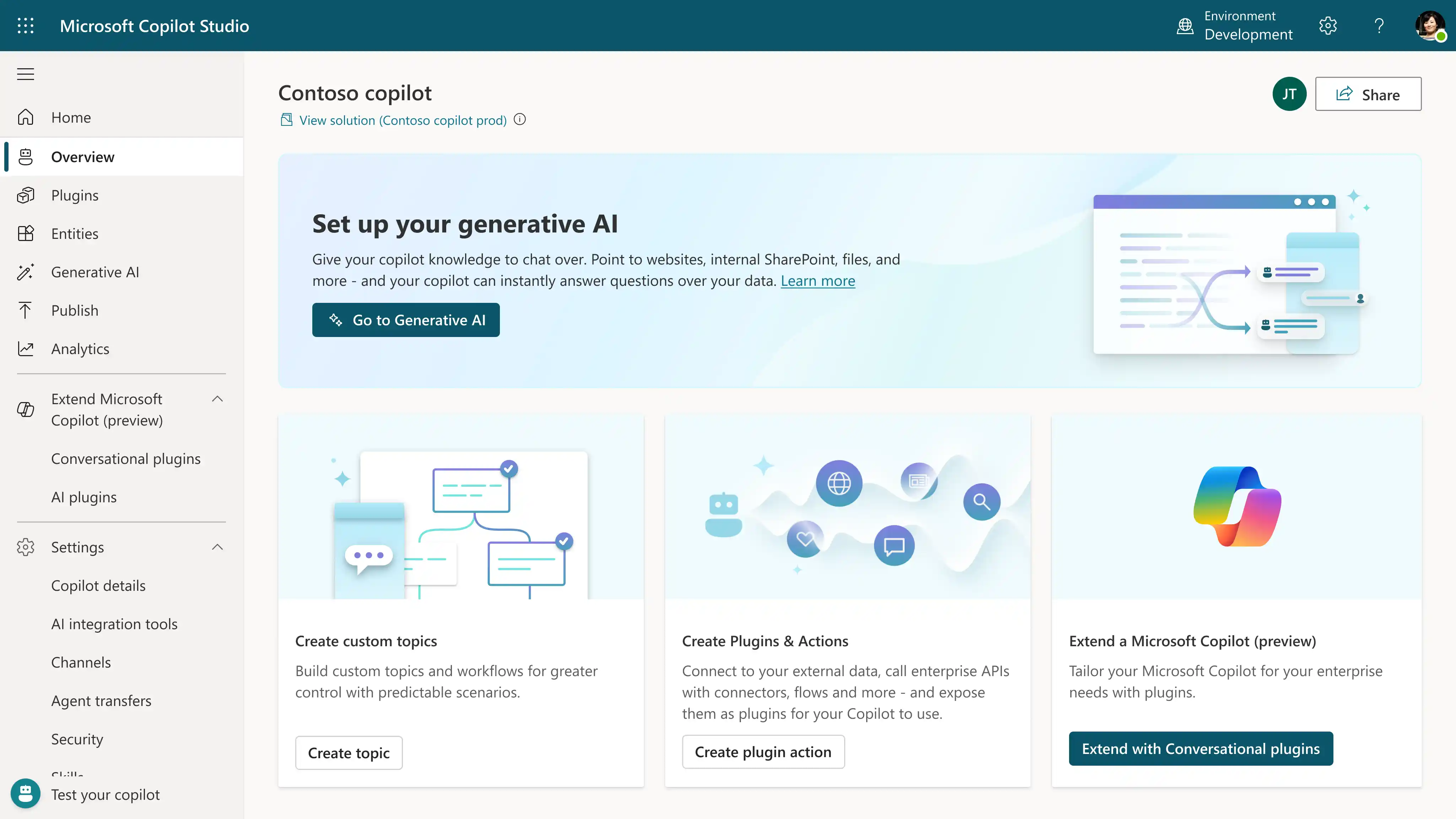

- Build on what is available in Copilot Studio: Copilot Studio provides a more structured environment with prebuilt templates and tools designed to integrate AI capabilities easily into applications. This approach is ideal for those who want a simplified development process with lower deployment complexity but still need a certain degree of customization.

- Use a combination of both to align with business goals: A hybrid approach takes advantage of both Azure’s powerful capabilities and the ready-to-use tools in Copilot Studio. This might be the best choice for organizations looking to balance customization with ease of use, allowing them to tailor AI features while accelerating development using existing resources.
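For the first option, the most hands-on path is to call a model deployed in Azure OpenAI Service directly over its REST API. The sketch below only prepares the HTTP request with Python's standard library and does not send it. The resource endpoint, deployment name, and key are placeholders, and the default `api-version` value is an example that should be checked against the current Azure OpenAI REST reference before use.

```python
import json
import urllib.request


def build_chat_request(endpoint: str, deployment: str, api_key: str,
                       messages: list,
                       api_version: str = "2024-02-01") -> urllib.request.Request:
    """Prepare (but do not send) a chat completions request to an Azure OpenAI deployment."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": messages, "temperature": 0.2}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(
        "https://my-resource.openai.azure.com",  # placeholder resource endpoint
        "my-gpt-deployment",                     # placeholder deployment name
        "<your-api-key>",                        # placeholder key
        [{"role": "user", "content": "Summarize our Q3 sales."}],
    )
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would send it and return the JSON response.
```

Copilot Studio hides this plumbing behind its designer, which is the trade-off the second option makes: less control over each request in exchange for faster development.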

What’s new in the Copilot Stack

At last year’s Microsoft Build, the company’s annual developer conference, the main topics were AI and Copilot, with the announcement of many more details and updates regarding the Copilot Stack. The company also introduced improvements to its various Copilot products, including support for the latest generative AI models such as OpenAI’s GPT-4o.

The main focus, however, was on developer tools, including enhancements to Copilot Studio (such as the ability to create extensions for other applications and custom agents for specific functions) and the broader Azure AI Studio, which now includes many models and has reached general availability, along with major changes to GitHub.

The biggest change to the Copilot Stack involves the inclusion of the Windows Copilot Runtime. This allows applications to call various AI components and, in the future, will enable AI features in more applications, many of which will run locally on Copilot+ PCs.

“What Win32 was to the graphical interface, we believe Windows Copilot Runtime will be to AI,” said Satya Nadella, CEO of Microsoft. This includes a new Copilot Library with over 40 models such as Phi-Silica and many other small language models (SLMs), along with APIs to integrate these models into applications and some no-code integrations for simpler apps.

Developers seem particularly excited that PyTorch now runs natively on Windows with DirectML acceleration, and that the Web Neural Network API (WebNN) is supported directly on Windows as well.

Azure AI Studio, previously announced and now generally available, offers many new models, including GPT-4o, Microsoft’s Phi-3-vision small language model, and other Phi-3 models. In addition, its catalog includes models such as Meta's Llama and models from Cohere, Mistral, and other startups, alongside the open models made available through the partnership with Hugging Face.

As mentioned earlier, the company has introduced new features to make it easier to extend Copilot within Copilot Studio.

This includes connectors linking Power Platform applications to various third-party tools such as Adobe, ServiceNow, Snowflake, and others. It will also support the creation of “Agents” that work asynchronously on the user's behalf and can eventually automate long-running business processes.

Microsoft also discussed the rollout of Azure AI services to more countries and the availability of the latest accelerators from Nvidia, AMD, and Microsoft’s own Maia. Microsoft's CEO also stated that GPT-4 has become 12 times cheaper to call and six times faster since its launch, a good sign for developers concerned about costs.

Conclusions

The field of Gen AI development is constantly evolving and supported by a vibrant community, but without a solid framework, it can quickly become confusing and frustrating, discouraging potential developers from leveraging the power of these new technologies for their projects.

That is why the introduction of the Copilot Stack has been a crucial step for the future of Microsoft’s AI assistant development. Having a clear map that outlines and provides a reference model for partners and developers is essential for ensuring that the Redmond company’s “copilot” can thrive in the future with the help of its community.

No one can say for sure what the future of Copilot will hold in the coming years, but for all developers who want to take part in it, a roadmap is already available to start setting the course toward its next milestones today.

FAQ on Microsoft Copilot Stack

What is the Microsoft Copilot Stack?

The Microsoft Copilot Stack is an architectural framework developed by Microsoft to support the development of conversational AI applications. It serves as the technical foundation for building intelligent assistants, known as "copilots", that integrate seamlessly with Microsoft 365 products and custom enterprise solutions. This stack simplifies the creation of natural language experiences while ensuring scalability, security, and consistency in adopting generative AI technologies.

What are the main layers of the Copilot Stack?

The Stack consists of four interconnected layers. At the base is the AI infrastructure on Azure, powered by advanced GPUs. Above that are the foundational models like GPT-4, which form the computational core. The orchestration layer interprets user prompts, manages external data integration, and handles responses. Finally, the user interface layer enables a streamlined conversational experience that transforms how users interact with software.

How does the Copilot Stack help developers?

By offering a clear architecture and reusable components, the Copilot Stack allows developers to focus on logic and context without rebuilding the AI infrastructure from scratch. It supports enterprise data integration, model adaptation for specific scenarios, and the implementation of security and control mechanisms.

Can I create a custom Copilot?

Yes, Microsoft offers multiple approaches. You can build a fully customized Copilot using Azure services, use Copilot Studio for a more guided low-code development experience, or combine both methods to balance flexibility and speed.

What is Copilot Studio’s role in the Stack?

Copilot Studio is the visual and simplified tool used to create, extend, and manage Copilot solutions. It helps build conversational interfaces, connect external services, and deploy asynchronous agents that perform complex tasks on behalf of users.

How does the Stack handle external data?

The orchestration layer analyzes user prompts and, when needed, enriches them with external data from APIs, databases, or the web. Techniques like Retrieval Augmented Generation (RAG) and plugins are used to enhance the context and accuracy of AI responses.

What are Foundation Models in the Stack?

Foundation Models are large-scale AI models such as GPT-4, DALL·E, or Whisper, designed to understand and generate language, images, or audio. These models are available in Azure AI Studio, including open-source options through Microsoft’s partnerships with platforms like Hugging Face.

What’s the difference between Copilot Pro and Copilot for Microsoft 365?

Copilot Pro targets individual users with access to generative AI features in Microsoft 365 apps through a monthly subscription. Copilot for Microsoft 365 targets enterprises and teams, offering access to Copilot Studio, advanced security, compliance, and large-scale deployment capabilities.

What are the latest updates to the Copilot Stack?

Microsoft has introduced Windows Copilot Runtime, a new Copilot Library with over 40 models, and new models like Phi-3. Tools such as PyTorch now run natively on Windows using DirectML. Copilot Studio supports more integrations with third-party apps, and the cost of running advanced models like GPT-4 has been significantly reduced.